Hybrid machine learning solution with Google Colab

Google Colab is an excellent free resource provided by Google for using GPUs or TPUs to train your own machine learning models or perform quantitative research. Personally, I use a hybrid workflow: I perform EDA and feature engineering on the dataset locally, before transitioning to Google Colab for model selection and hyperparameter tuning.

Google Colab really is a game changer, as basically anyone with a cheap laptop and an internet connection can have access to some of the most powerful processors for heavy-duty number-crunching, whether that's optimising a trading model, training a machine learning model to identify images, or performing natural language processing on a ton of websites or documents. Of course, what Google is hoping for is that quantitative finance professionals, data scientists, and academics will eventually opt for the Google Cloud Platform if they need even more processing power. But ultimately, all of these free resources do democratize programming and machine learning, so we're all better off for it.

With Colab, you can request GPUs (NVIDIA Tesla K80 GPU) and TPUs and use them for a maximum of 12 hours.

Hybrid cloud workflow for Python

Sharing files between ChromeOS and Crostini (Linux container)

- Using the `Files` app of ChromeOS, create a folder called `Colab Notebooks`. This is where we can store any of our IPython notebooks and Python scripts (e.g., `.ipynb`, `.py`).
- Create a subfolder within `Colab Notebooks` called `datasets`. This is where our datasets are stored.
- Create a subfolder within `Colab Notebooks` called `modules`. This is where our personal Python modules are stored.

.
└── Colab Notebooks
    ├── datasets
    └── modules

- In the

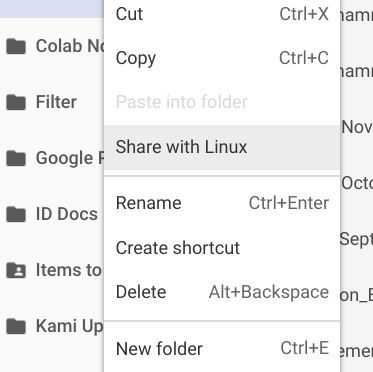

Filesapp, right-click theColab Notebooksfolder and selectShare with Linux. You can now access this folder in the/mnt/chromeos/directory in Crostini.

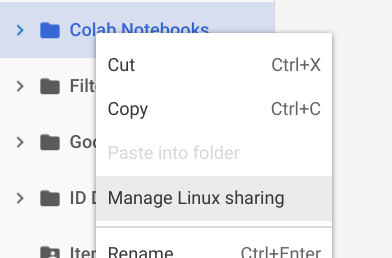

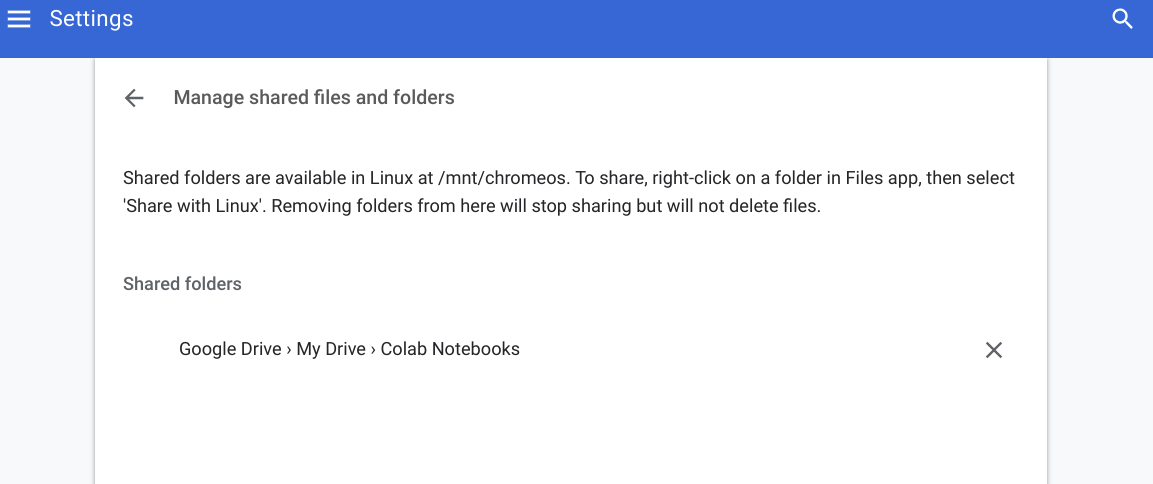

- To stop sharing any folders/files with Linux, open `Settings` in ChromeOS and select `Manage shared files and folders`. From here you can remove any folders/files that are being shared between both systems.
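The folder layout described above can also be created or checked from Python inside Crostini. This is only a minimal sketch: the helper name `ensure_layout` is hypothetical, and the default root path is an assumption based on where the shared Google Drive folder appears on my machine, so adjust it to your own setup.

```python
from pathlib import Path

# Assumed location of the shared folder inside Crostini; adjust to your setup.
DEFAULT_ROOT = Path("/mnt/chromeos/GoogleDrive/MyDrive/Colab Notebooks")


def ensure_layout(root: Path = DEFAULT_ROOT) -> list[Path]:
    """Create the datasets and modules subfolders if they are missing."""
    subfolders = [root / "datasets", root / "modules"]
    for folder in subfolders:
        # parents=True also creates root itself when it does not exist yet.
        folder.mkdir(parents=True, exist_ok=True)
    return subfolders
```

Calling `ensure_layout()` a second time is harmless, since existing folders are left untouched.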

Code: Working locally and in Colab

- GitHub:
  - Code stored in a public GitHub repository can be pulled directly.
- Google Drive:
  - Copy your IPython notebook or Python script into the `Colab Notebooks` folder directly.
  - Run the `nbconvert` command to output a Python script into the shared folder, as shown below.

!jupyter nbconvert ml_kaggle-home-loan-credit-risk-model-logit.ipynb --to script --output-dir='/mnt/chromeos/GoogleDrive/MyDrive/Colab Notebooks'
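If you would rather run the export from a Python script than from a notebook cell, the same command can be issued through `subprocess`. The sketch below is an illustration under assumptions: `nbconvert_command` and `export_notebook` are hypothetical helper names, and the returned `.py` path assumes the notebook uses a Python kernel.

```python
import subprocess
from pathlib import Path


def nbconvert_command(notebook: str, output_dir: str) -> list[str]:
    """Build the argument list for `jupyter nbconvert --to script`."""
    return [
        "jupyter", "nbconvert", notebook,
        "--to", "script",
        # Passed as a single argument, so spaces in the path need no quoting.
        f"--output-dir={output_dir}",
    ]


def export_notebook(notebook: str, output_dir: str) -> Path:
    """Run nbconvert and return the expected path of the generated script."""
    subprocess.run(nbconvert_command(notebook, output_dir), check=True)
    # Assumes a Python notebook, for which nbconvert writes a .py file.
    return Path(output_dir) / Path(notebook).with_suffix(".py").name
```

Passing the arguments as a list avoids the shell entirely, which sidesteps quoting problems with the space in `Colab Notebooks`.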

Datasets: Working locally and in Colab

As the folder `Colab Notebooks/datasets` is in Google Drive, it can be accessed locally on your Chromebook and also from Colab.

Mount Google Drive to your Colab runtime instance

from google.colab import drive

drive.mount('/content/gdrive')

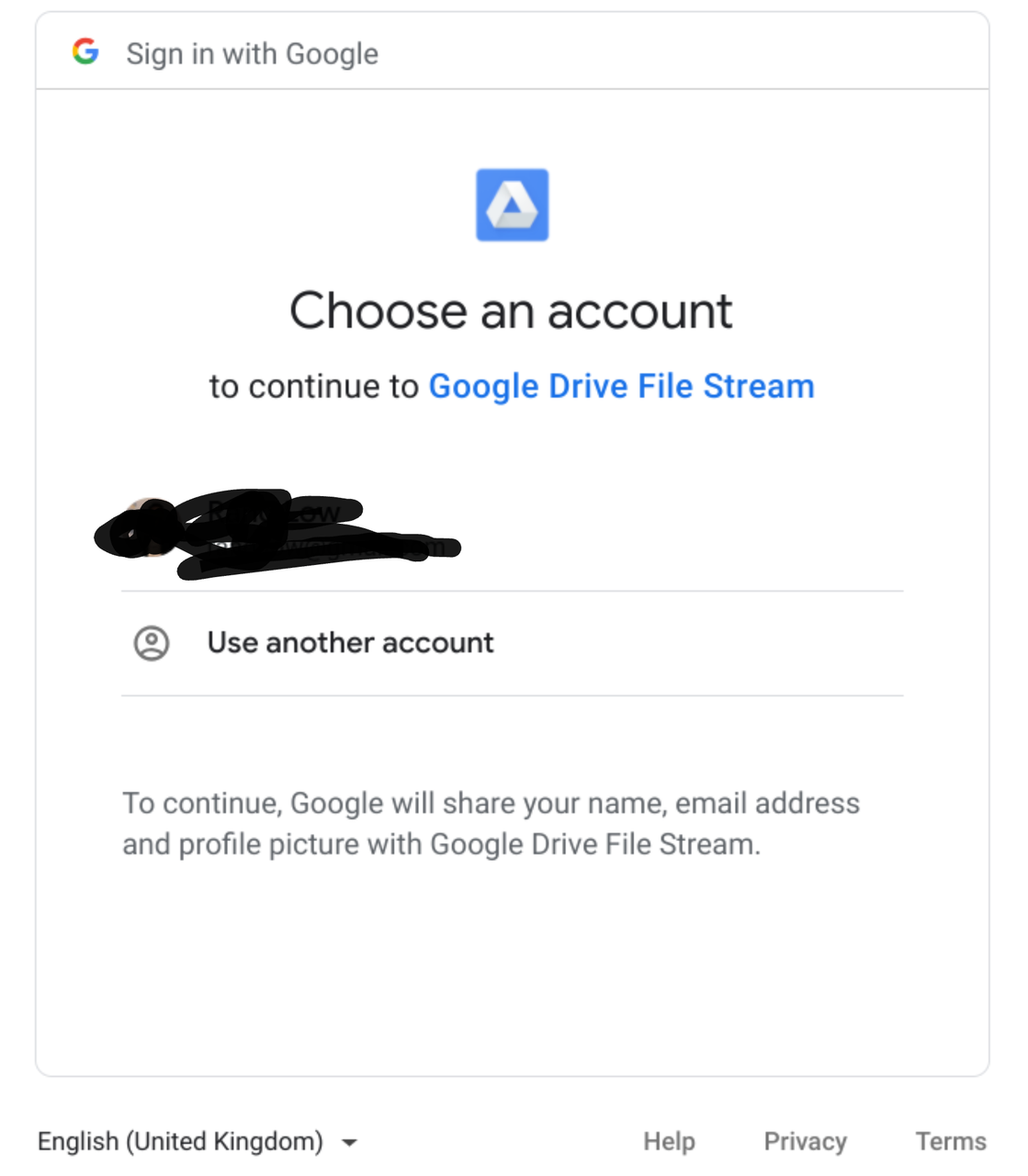

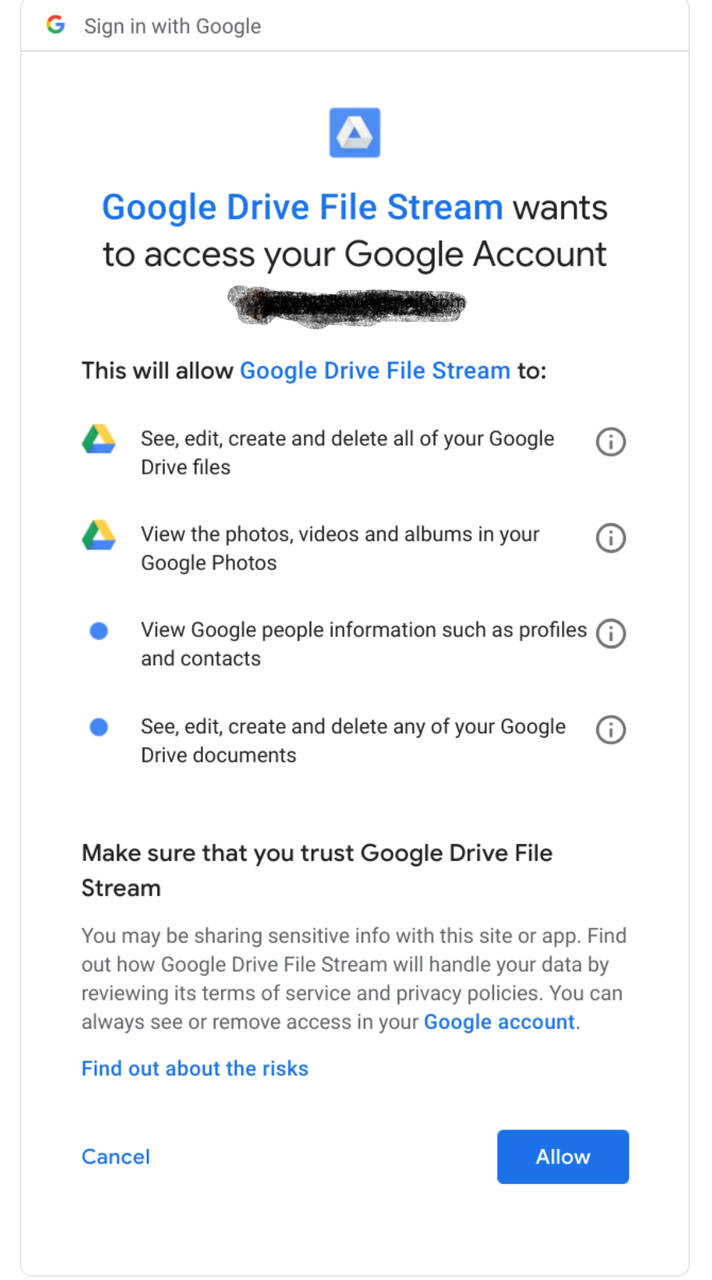

Click the link provided, and a new tab will open asking you to select the Google Account whose Google Drive you want to access.

Upon selecting an account, you need to authorize Google Cloud to interface with Google Drive.

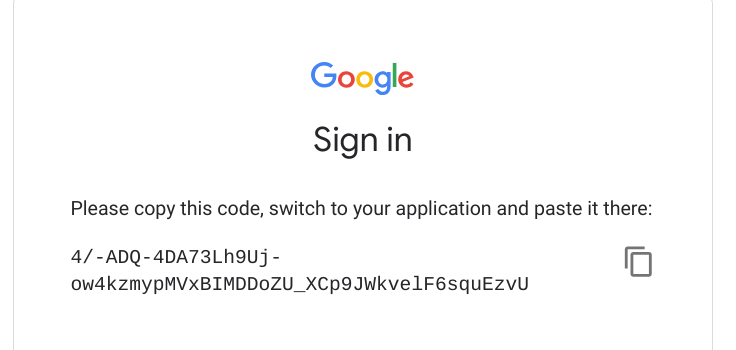

Once you've authorized the action, you will be brought to another page that has a token. Copy this token and paste it into the prompt in your Colab notebook. At that point, you will be able to access all files in your Google Drive from your runtime instance.
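Since the same notebook may be run both locally and in Colab, it can help to guard the mount step so it is skipped outside Colab. This is a rough sketch rather than an official pattern: the helper name `mount_drive` is hypothetical, and it relies only on the fact that `google.colab` cannot be imported outside a Colab runtime.

```python
def mount_drive(mount_point: str = "/content/gdrive") -> bool:
    """Mount Google Drive when running in Colab; do nothing elsewhere."""
    try:
        from google.colab import drive  # only importable inside Colab
    except ImportError:
        # Running locally: the Drive folder is already shared via ChromeOS.
        return False
    drive.mount(mount_point)
    return True
```

The return value tells the rest of the notebook which environment it is in, for example to pick between the `/content/gdrive` and `/mnt/chromeos` paths.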

Loading your datasets into Google Colab

Now that Google Drive is mounted, we go into the directory where our files are stored. In my case, my datasets are stored in `Colab Notebooks/datasets/kaggle/`, and I am using pickleshare to retrieve datasets that I stored earlier. You can also use `pd.read_csv` if you have CSV files in your Google Drive, since it is now mounted on your runtime instance.

import pickleshare

inputDir = "/content/gdrive/My Drive/Colab Notebooks/datasets/kaggle/home-credit-default-risk"

storeDir = inputDir+'/pickleshare'

db = pickleshare.PickleShareDB(storeDir)

print(db.keys())
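For the CSV route mentioned above, a small helper can collect every CSV file in a dataset folder and read each one with `pd.read_csv`. This is a sketch under assumptions: `find_csv_files` and `load_csv_files` are hypothetical names, and pandas is assumed to be installed (it is in Colab by default).

```python
from pathlib import Path


def find_csv_files(dataset_dir: str) -> list[Path]:
    """Return the CSV files directly inside dataset_dir, sorted by name."""
    return sorted(Path(dataset_dir).glob("*.csv"))


def load_csv_files(dataset_dir: str) -> dict:
    """Read every CSV in dataset_dir into a DataFrame keyed by file stem."""
    import pandas as pd  # imported lazily so find_csv_files works without pandas

    return {path.stem: pd.read_csv(path) for path in find_csv_files(dataset_dir)}
```

With the variables from the snippet above, `load_csv_files(inputDir)` would return one DataFrame per CSV file stored in that folder.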

Loading your Python modules into your Colab runtime instance

The easiest way is to use the `sys` module and append the modules directory, or the directory where all your notebooks and scripts are stored in your Google Drive, to the Python path.

import sys

sys.path.append('/content/gdrive/My Drive/Colab Notebooks/modules') # modules

sys.path.append('/content/gdrive/My Drive/Colab Notebooks') # colab

Now you can load in your own modules easily!

import rand_eda as eda
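Re-running a notebook cell appends the same directory to `sys.path` again each time, and a mistyped path fails silently until the import breaks. A slightly more defensive variant is sketched below; the helper name `add_to_path` is hypothetical and not part of any library.

```python
import sys
from pathlib import Path


def add_to_path(*directories: str) -> list[str]:
    """Append existing directories to sys.path once; return the ones added."""
    added = []
    for directory in directories:
        # Skip paths that do not exist or are already on sys.path.
        if Path(directory).is_dir() and directory not in sys.path:
            sys.path.append(directory)
            added.append(directory)
    return added
```

An empty return value on the first call is a quick hint that the path is wrong or that Google Drive has not been mounted yet.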

Conclusion

There you go! Now you know how to have a hybrid workflow to combine working on Python locally on your machine and also on Colab! You've learnt how to perform the following:

- Share folders/files between Google Drive in ChromeOS and Crostini (Linux Container)

- Mount Google Drive into your Colab runtime instance

- Append a directory that stores your own Python modules into the Python path
